Data Observability and Data Quality are two key aspects of data management. This blog focuses on Data Observability tools and the framework behind them.

The growing technology landscape has pushed organizations to adopt newer ways of harnessing the power of data. Data drives the key strategic decisions organizations make, which is where data quality comes in. Data management is integral to an organization’s effort to separate quality data from ambiguous data.

What is Data Observability?

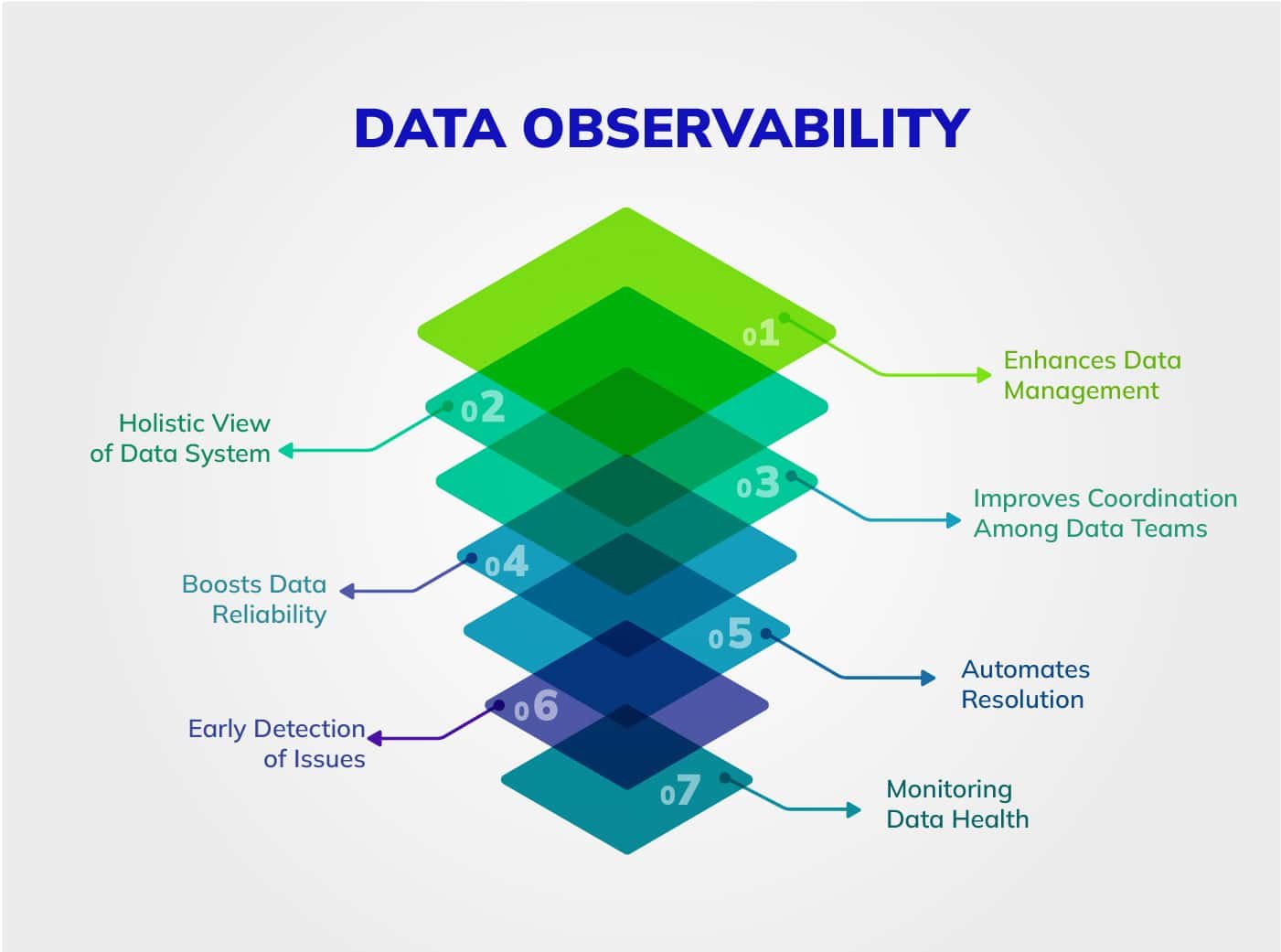

Data Observability is the practice of monitoring, tracking, and ensuring data quality, reliability, and performance as data moves through an organization’s data pipelines and systems. It involves real-time monitoring and documentation that provide visibility into data quality, helping the organization detect and address data-related issues.

Data Observability is essential for maintaining data integrity, making informed decisions, and ensuring that data-driven processes run smoothly within an organization.

Data Observability vs. Data Quality

Data Observability

Data Observability focuses on monitoring and tracking data as it flows through pipelines, ensuring it remains accessible, reliable, and performs well. It emphasizes real-time visibility and the ability to detect and respond to issues promptly.

Data Quality

Data Quality, on the other hand, is a broader concept that encompasses data accuracy, completeness, consistency, and reliability. It addresses issues like missing values, duplicates, and inconsistencies in the data itself. Data quality tools help maintain high data quality standards.

Tools Used in Data Observability

Data Observability tools are software solutions designed to monitor, track, and ensure the quality and reliability of data in an organization’s data pipelines and systems. They provide visibility into the data flow, allowing organizations to detect anomalies, assess data quality, and address issues in real time. Commonly cited examples include Datadog, Splunk, New Relic, and Dynatrace.

Best Data Observability Tools

Incorporate.io

It is a Data Observability platform that provides a comprehensive view of the health of your systems and data. Its email notification system alerts your team as soon as a metric deviates from the norm, so they can act quickly to fix the issue.

Key Features

- The tool is free to use for 3 years, which makes it economical for startups.

- It offers 8 different alert types, including Nulls, Cardinality, Median, Variance, Skewness, and Freshness.

- The platform sends real-time notifications, which supports effective issue management and resolution.

- It helps you identify trends and underlying issues.
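
The alert types named above correspond to simple column-level statistics. The following is a minimal sketch of how such metrics could be computed in plain Python. It is not the platform's actual implementation: the function names, the sample column, and the 24-hour freshness window are assumptions made for illustration, and the column is assumed to contain at least one non-missing value.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean, median, pvariance


def column_metrics(values):
    """Summarize one numeric column; None marks a missing value."""
    present = [v for v in values if v is not None]
    avg = mean(present)
    var = pvariance(present)
    # Population skewness: mean cubed deviation over the cubed standard deviation.
    cubed = mean([(v - avg) ** 3 for v in present])
    return {
        "nulls": len(values) - len(present),
        "cardinality": len(set(present)),
        "median": median(present),
        "variance": var,
        "skewness": 0.0 if var == 0 else cubed / var ** 1.5,
    }


def is_stale(last_loaded_at, now, max_age):
    """Freshness check: True when the latest load is older than max_age."""
    return now - last_loaded_at > max_age


order_totals = [10, 12, None, 11, 10, 13]  # made-up sample column
metrics = column_metrics(order_totals)

# Hypothetical timestamps: the last load happened 30 hours before "now".
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_load = datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc)
stale = is_stale(last_load, now, max_age=timedelta(hours=24))
```

An alert would then fire whenever one of these values moves outside a range the team considers normal.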

Monte Carlo

It uses Machine Learning to scrutinize datasets. This Data Observability tool can proactively detect anomalies and thus predict issues before they materialize.

Key Features

- Real-time monitoring helps identify potential issues as they emerge.

- Advanced analytical capabilities contribute to well-informed decision-making.

- It lets you intuitively explore and understand the intricacies of your data.

Bigeye

Its analytical and data visualization capabilities help Data Scientists make effective data-driven decisions. It facilitates the measurement, improvement, and clear communication of data quality.

Key Features

- The automated reporting feature lets you easily share data insights with the team.

- An intuitive dashboard helps you keep track of data quality metrics.

- Sophisticated algorithms help identify emerging data irregularities.

- It simplifies combining data from diverse sources.

Informatica

Informatica is a company that offers data integration and management solutions. Alongside its various data-related tools, its platform also offers features related to Data Observability, which can help organizations monitor data flows and ensure data quality as part of their data management processes.

Key Features

- It has data profiling capabilities to analyze data quality and identify issues.

- You can continuously monitor data pipelines to detect anomalies and data quality issues.

- Define and enforce data quality rules and standards to ensure data accuracy and consistency.
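
To make the idea of defining and enforcing data quality rules concrete, here is a small, tool-agnostic sketch in plain Python. It does not use or imitate Informatica's API. The rule helpers (`not_null`, `in_range`), the rule names, and the sample customer rows are all hypothetical.

```python
def not_null(field):
    """Rule factory: the field must be present and not None."""
    return lambda row: row.get(field) is not None


def in_range(field, low, high):
    """Rule factory: the field must be present and within [low, high]."""
    return lambda row: row.get(field) is not None and low <= row[field] <= high


# Hypothetical rule set, keyed by a human-readable rule name.
RULES = {
    "email is present": not_null("email"),
    "age is between 0 and 120": in_range("age", 0, 120),
}


def validate(rows, rules):
    """Return {rule name: [indexes of failing rows]} for rules that have failures."""
    failures = {}
    for name, check in rules.items():
        bad = [i for i, row in enumerate(rows) if not check(row)]
        if bad:
            failures[name] = bad
    return failures


customers = [
    {"email": "a@example.com", "age": 34},
    {"email": None, "age": 29},
    {"email": "c@example.com", "age": 150},
]
violations = validate(customers, RULES)
# violations == {"email is present": [1], "age is between 0 and 120": [2]}
```

Keeping rules as named, reusable functions makes it easy to run the same standards against every batch that passes through a pipeline.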

10 Convincing Reasons That Your Organization Needs a Data Observability Platform

If your organization has been facing any of the following issues, it is time to switch to one of the popular Data Observability tools. Here are ten of them:

Data Quality Issues

Constant data quality issues, such as inaccurate or incomplete data, are disrupting business processes.

Data Downtime

Data pipelines frequently experience downtime, causing delays in data availability and analytics.

Data Discrepancies

Any inconsistency or ambiguity between the data generated and the data consumed can lead to confusion and distrust. In such a case, you need to integrate with a Data Observability platform.

Poor Data Governance

The organization lacks visibility into how data is accessed and modified, which weakens its data governance policy.

Compliance Challenges

If you struggle to adhere to data regulations and compliance practices, Data Observability tools can help.

Difficulty in Root Cause Analysis

Identifying the root cause of data issues is challenging, resulting in longer resolution times.

Data Complexity

Your data ecosystem is becoming increasingly complex, with data flowing from multiple sources, thus making it hard to track and manage.

Manual Monitoring Overload

You rely heavily on manual monitoring and alerting, which is time-consuming and error-prone.

Data Volume Growth

Data volume is rapidly increasing, making managing and monitoring data at scale difficult.

Complex Transformations

Data transformations are becoming increasingly complex; you need visibility into these processes.

Data Observability Examples

Data Observability ensures that the data is accurate, consistent, and trustworthy. Here are some examples of Data Observability in action:

Data Quality Monitoring

Organizations can set up automated checks to monitor data quality. For instance, they can detect missing values, outliers, or inconsistencies in datasets. If sales data suddenly shows a significant drop, Data Observability tools can alert data engineers to investigate the issue, which may uncover a problem with data collection or processing.
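
As a rough illustration of such automated checks, the sketch below measures the share of missing values and flags a sudden drop against a trailing average. The function names, the 7-day window, the 50% drop threshold, and the sales figures are assumptions chosen for the example, not values from any particular tool.

```python
from statistics import mean


def missing_rate(values):
    """Fraction of entries that are missing (None)."""
    return sum(v is None for v in values) / len(values)


def sudden_drop(daily_totals, window=7, max_drop=0.5):
    """True when the latest value is more than max_drop below the mean
    of the preceding `window` values."""
    *history, latest = daily_totals
    baseline = mean(history[-window:])
    return latest < baseline * (1 - max_drop)


# Made-up daily sales totals; the last day collapses.
daily_sales = [1020, 980, 1005, 990, 1010, 1000, 995, 310]

needs_investigation = sudden_drop(daily_sales)        # True: 310 is below 50% of 1000
share_missing = missing_rate([120, None, 95, None])   # 0.5
```

A real deployment would tune the window and threshold per dataset, since a drop that is alarming for daily sales may be routine for weekend traffic.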

Data Lineage Tracking

Data Observability tools can visually represent how data flows through an organization’s systems. This lineage tracking helps in understanding the source of data and its transformations. For example, if a financial report shows incorrect numbers, Data Observability can trace the problem back to the specific data source or transformation step.
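
Underneath the visual representation, lineage is a graph of datasets and their inputs. The sketch below assumes a hypothetical lineage map (all dataset names are invented) and walks it breadth-first to list everything upstream of a report, nearest dependencies first.

```python
# Hypothetical lineage graph: each dataset maps to the datasets it is built from.
LINEAGE = {
    "finance_report": ["revenue_summary"],
    "revenue_summary": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
    "orders_raw": [],
    "fx_rates": [],
}


def upstream(dataset, lineage):
    """Return every dataset that feeds into `dataset`, nearest first."""
    seen = []
    queue = list(lineage.get(dataset, []))
    while queue:
        current = queue.pop(0)
        if current not in seen:
            seen.append(current)
            queue.extend(lineage.get(current, []))
    return seen


suspects = upstream("finance_report", LINEAGE)
# suspects == ["revenue_summary", "orders_clean", "fx_rates", "orders_raw"]
```

When a report shows incorrect numbers, this list is the set of sources and transformation outputs worth checking, in order of proximity to the report.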

Anomaly Detection

By establishing baseline patterns of data behavior, Data Observability systems can detect anomalies or deviations from the norm. For example, a sudden increase in website traffic or a drop in social media engagement can be detected and investigated promptly.
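
One simple way to turn a baseline into an anomaly check is a z-score test: measure how many standard deviations a new value sits from the baseline mean. This is only one of many possible techniques, and the threshold of 3 and the traffic numbers below are illustrative assumptions.

```python
from statistics import mean, stdev


def is_anomaly(baseline, value, threshold=3.0):
    """True when `value` is more than `threshold` standard deviations
    away from the mean of `baseline`."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Made-up hourly website visits that form the baseline.
hourly_visits = [480, 510, 495, 505, 490, 500, 520, 500]

spike = is_anomaly(hourly_visits, 900)    # True: far above the usual range
normal = is_anomaly(hourly_visits, 510)   # False: within normal variation
```

Production systems often account for seasonality and trends as well, which a plain z-score does not.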

Data Access Control

Data Observability also involves ensuring that only authorized personnel can access sensitive data. Access logs and permissions can be monitored to detect any unauthorized access or data security breaches.
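
A minimal sketch of access-log monitoring could compare each logged event against a permission table. The permission map, user names, dataset names, and log format below are all hypothetical.

```python
# Hypothetical permissions: which users may read which datasets.
PERMISSIONS = {
    "customers_pii": {"alice", "bob"},
    "sales": {"alice", "bob", "carol"},
}


def unauthorized_events(access_log, permissions):
    """Return log entries where the user is not permitted on the dataset.
    Datasets missing from the permission table allow nobody."""
    return [
        event
        for event in access_log
        if event["user"] not in permissions.get(event["dataset"], set())
    ]


access_log = [
    {"user": "alice", "dataset": "customers_pii"},
    {"user": "carol", "dataset": "customers_pii"},
    {"user": "carol", "dataset": "sales"},
]
flagged = unauthorized_events(access_log, PERMISSIONS)
# flagged == [{"user": "carol", "dataset": "customers_pii"}]
```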

Data Versioning

Maintaining a history of data changes and versions is crucial for Data Observability. This ensures that teams can trace back to previous data states if errors occur or if they need to reproduce past results.
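
As a toy illustration of data versioning, the sketch below stores a new snapshot only when the content hash of the data changes. The `VersionHistory` class is hypothetical and keeps everything in memory; it also assumes the rows are JSON-serializable. Real systems persist versions in storage built for that purpose.

```python
import hashlib
import json


class VersionHistory:
    """Keep a snapshot of a dataset each time its content changes."""

    def __init__(self):
        self._versions = []  # list of (content hash, snapshot) pairs

    def commit(self, rows):
        """Store `rows` if they differ from the latest version.
        Returns the index of the latest version after the call."""
        payload = json.dumps(rows, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(payload).hexdigest()
        if not self._versions or self._versions[-1][0] != digest:
            # Re-parsing the payload gives an independent copy of the data.
            self._versions.append((digest, json.loads(payload)))
        return len(self._versions) - 1

    def get(self, version):
        """Return the snapshot stored at the given version index."""
        return self._versions[version][1]


history = VersionHistory()
v0 = history.commit([{"id": 1, "price": 10}])
same = history.commit([{"id": 1, "price": 10}])   # unchanged data, no new version
v1 = history.commit([{"id": 1, "price": 12}])     # changed data, new version
original = history.get(v0)
```

Here `history.get(v0)` still returns the first snapshot after later commits, which is what lets a team reproduce past results.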

Data Performance Monitoring

Observing the performance of data pipelines is essential. For instance, if a data pipeline is responsible for processing and delivering real-time financial data to trading systems, monitoring its latency and throughput can help ensure that trading decisions are based on the most up-to-date information.
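
Latency and throughput can be captured by timing each pipeline step. The wrapper below is a minimal sketch; `run_with_metrics` and the doubling step are invented for the example, and a real pipeline would send these numbers to a metrics store rather than return them.

```python
import time


def run_with_metrics(process, batch):
    """Run `process` on `batch` and report latency and throughput."""
    start = time.perf_counter()
    result = process(batch)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading on very fast steps.
    throughput = len(batch) / elapsed if elapsed > 0 else float("inf")
    return result, {"latency_s": elapsed, "throughput_rows_per_s": throughput}


def double_prices(rows):
    """Stand-in for a real transformation step."""
    return [r * 2 for r in rows]


output, step_metrics = run_with_metrics(double_prices, [1, 2, 3])
```

Comparing `latency_s` against an agreed limit per step is one way to notice a slowing pipeline before downstream consumers do.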

Data Compliance and Governance

Data Observability plays a role in ensuring compliance with regulations like GDPR or HIPAA. Tools can monitor data usage to identify potential violations and ensure that data handling practices meet legal requirements.

Alerting and Notification

Data Observability systems can send alerts and notifications to data engineers, analysts, or other relevant personnel when issues or anomalies are detected. These alerts can be configured to trigger actions like pausing data pipelines or initiating investigations.
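
The sketch below shows one way an alert could both notify people and trigger an action such as pausing a pipeline. The `Pipeline` class, the severity levels, and the alert dictionary format are assumptions for illustration, and `notify` stands in for whatever channel a team uses (email, chat, paging).

```python
class Pipeline:
    """Minimal stand-in for a pipeline that can be paused."""

    def __init__(self, name):
        self.name = name
        self.paused = False

    def pause(self):
        self.paused = True


def handle_alert(alert, pipeline, notify):
    """Always notify; additionally pause the pipeline on critical alerts."""
    notify(f"[{alert['severity']}] {pipeline.name}: {alert['message']}")
    if alert["severity"] == "critical":
        pipeline.pause()


sent = []  # collects messages instead of sending them anywhere
orders = Pipeline("orders_pipeline")

handle_alert({"severity": "warning", "message": "null rate above 2%"}, orders, sent.append)
paused_after_warning = orders.paused    # False: warnings only notify

handle_alert({"severity": "critical", "message": "schema changed"}, orders, sent.append)
paused_after_critical = orders.paused   # True: critical alerts pause the pipeline
```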

Root Cause Analysis

Data Observability tools can help identify the root causes when data issues occur. For example, if a report shows incorrect customer churn rates, observability tools can assist in pinpointing whether the issue stems from data collection, processing, or reporting.

Documentation and Metadata Management

Keeping thorough documentation and metadata about data sources, schemas, and transformations is key to Data Observability. This helps data users understand the context and lineage of the data they’re working with.
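
Metadata becomes most useful when it is machine-readable. As a sketch, the record below documents a dataset's source, schema, and transformations, and a helper compares the documented schema with what actually arrived. The `DatasetMetadata` fields, the dataset, and the column types are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetMetadata:
    """Documented facts about one dataset."""
    name: str
    source: str
    schema: dict  # column name -> type name
    transformations: list = field(default_factory=list)


def schema_drift(expected, actual):
    """Compare a documented schema with an observed one."""
    shared = set(expected) & set(actual)
    return {
        "missing": sorted(set(expected) - set(actual)),
        "unexpected": sorted(set(actual) - set(expected)),
        "type_changed": sorted(c for c in shared if expected[c] != actual[c]),
    }


orders = DatasetMetadata(
    name="orders_clean",
    source="orders_raw",
    schema={"id": "int", "amount": "float", "created_at": "timestamp"},
    transformations=["drop duplicate ids", "convert amount to USD"],
)

# Hypothetical schema observed in today's load.
observed = {"id": "int", "amount": "str", "channel": "str"}
drift = schema_drift(orders.schema, observed)
# drift == {"missing": ["created_at"], "unexpected": ["channel"], "type_changed": ["amount"]}
```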

A Data Observability framework is a structured approach or set of practices and tools used to implement Data Observability within an organization. It typically includes data monitoring, alerting, documentation, and continuous improvement components.

Data Observability vs. Data Governance

Data Observability

Data Observability focuses on real-time monitoring and tracking of data to ensure its reliability and performance. It aims to address issues promptly as they arise.

Data Governance

Data Governance is a broader discipline concerned with establishing policies, standards, and processes for managing data throughout its lifecycle. It focuses on data quality, security, compliance, and data stewardship.

FAQs

What is the Purpose of Data Observability?

Data Observability ensures data pipelines operate smoothly by monitoring data quality, performance, and reliability. It helps detect and address issues promptly, ensuring trustworthy and actionable data for informed decision-making.

What are the Five Major Pillars of Data Observability?

The key pillars are:

- Data Quality: Ensuring accurate, complete, and reliable data.

- Data Availability: Guaranteeing data is accessible when needed.

- Data Performance: Optimizing data processing efficiency.

- Data Compliance: Ensuring adherence to data regulations.

- Data Lineage: Tracking data’s journey and transformations.

What is the Difference Between DataOps and Data Observability?

DataOps focuses on end-to-end data pipeline automation and collaboration, emphasizing the speed of data delivery. Data Observability, on the other hand, concentrates on monitoring and ensuring data quality, reliability, and performance in existing pipelines, and often works in tandem with DataOps to improve data operations.

Concluding Thoughts

Data Observability ensures that data remains accessible, reliable, and performs well as it flows through an organization’s data pipelines. It helps organizations promptly detect and address data issues, improving data quality and decision-making.

The scope of data skills is expanding. They find applications everywhere, from assessing data quality to ensuring compliance in data management. As organizations increasingly rely on data-driven decisions, acquiring skills in this domain will help you advance your career.

Pickl.AI provides a comprehensive learning platform where you can acquire data science skills. The courses range from foundation courses for beginners to Data Science job preparation programs. They are designed by industry experts to help you acquire job-ready skills. For more information, visit Pickl.AI.