Summary: Weights and biases are key to how neural networks learn, adapt, and improve. They help AI models make accurate decisions in tasks like image recognition and NLP. Understanding them is a foundation for learning data science and machine learning.

Introduction

In Artificial Intelligence (AI), neural networks power everything from voice assistants to self-driving cars. But what exactly makes these networks learn and improve over time? The answer lies in two key building blocks: weights and biases.

In this easy-to-understand guide, we will explain what weights and biases are, why they matter, and how they help machines make smart decisions. We’ll also walk through how these two components work behind the scenes in popular technologies like image recognition and natural language processing.

Key Takeaways

- Weights assign importance to each input in a neural network, influencing decision-making.

- Biases add flexibility by shifting the point at which each neuron activates.

- Neural networks learn by making predictions (forward propagation) and then adjusting weights and biases to reduce their errors (backward propagation).

- Real-world AI, like image recognition and NLP, relies on well-tuned weights and biases learned from data.

- Mastering weights and biases is foundational to building a strong data science skillset.

Understanding Weights: The Core Decision-Makers

Imagine a neural network as a giant web made of tiny decision-makers called neurons. Paths connect these neurons, and every path has a number attached to it. That number is called a weight.

Weights decide how important one piece of information is compared to another. If a weight is large in size, the network treats that input as very important; if it is close to zero, the network pays little attention to it. A weight can also be negative, in which case the input pushes the neuron’s output down instead of up.
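Here is a minimal sketch of how a single neuron combines its inputs. It assumes three inputs with the same value and hand-picked weights. The helper name `weighted_sum` is hypothetical, chosen for illustration, and not taken from any library.

```python
def weighted_sum(inputs, weights):
    """Multiply each input by its weight and add up the results."""
    return sum(x * w for x, w in zip(inputs, weights))

# Three inputs with the same value but different (hypothetical) weights.
inputs = [1.0, 1.0, 1.0]
weights = [2.0, 0.5, -1.0]  # large, small, and negative

# How much each input contributes to the neuron's total.
contributions = [x * w for x, w in zip(inputs, weights)]
total = weighted_sum(inputs, weights)

print(contributions)  # [2.0, 0.5, -1.0]
print(total)          # 1.5
```

The inputs are identical, yet the first one contributes four times as much as the second, and the third pulls the total down.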

Let’s say you’re training a neural network to recognize handwritten digits. Each pixel in an image of a digit is an input. The weights connected to each pixel help the network decide how much it should “listen” to that pixel when guessing the digit.

If some pixels consistently help the network get the answer right, training strengthens their weights. If other pixels don’t help, training shrinks their weights toward zero.

Over time, by adjusting these weights, the network learns which features matter most for the task at hand. In short, weights are how neural networks learn from data.
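To see training turn a useful input up and a useless one down, here is a minimal sketch. It uses a single linear neuron with no bias and no activation function, trained by plain gradient descent on mean squared error. The toy data, learning rate, and step count are hypothetical choices for illustration. Real networks have far more inputs and usually rely on a library optimizer.

```python
# Toy data (hypothetical): the target is always 2 * x1.
# x2 carries no information about the target: across the data set it is
# uncorrelated with both x1 and the target.
data = [
    ((1.0, 1.0), 2.0),
    ((2.0, -1.0), 4.0),
    ((3.0, -1.0), 6.0),
    ((4.0, 1.0), 8.0),
]

weights = [0.5, 0.5]   # both inputs start out equally important
learning_rate = 0.01

for _ in range(1000):
    gradients = [0.0, 0.0]
    for (x1, x2), target in data:
        error = weights[0] * x1 + weights[1] * x2 - target  # prediction minus target
        # Gradient of the mean squared error with respect to each weight.
        gradients[0] += 2 * error * x1 / len(data)
        gradients[1] += 2 * error * x2 / len(data)
    # Step each weight against its gradient to reduce the error.
    weights = [w - learning_rate * g for w, g in zip(weights, gradients)]

print([round(w, 3) for w in weights])  # [2.0, 0.0]
```

The informative input ends up with weight 2, which exactly reproduces the target. The uninformative input’s weight decays to almost zero.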

Biases: Adding Flexibility to Learning

While weights adjust the importance of inputs, biases help the network stay flexible. Every neuron in a neural network also has a bias: a number added to the weighted total of its inputs before the neuron decides whether to activate.

Why do we need biases? Imagine trying to fit into a pair of shoes without loosening the laces—you might not get the perfect fit. Biases are like those laces; they let the network make tiny adjustments to fit the data just right.

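Let’s take a simple example. Suppose a neuron should “turn on” when brightness reaches about 5. But real-world data is never perfect: a reading might be 4.8 or 5.2. Without a bias, a single-input neuron’s weighted total crosses its firing point only at a brightness of 0, so it cannot place its cutoff near 5 at all. The bias shifts that cutoff to wherever it is needed, for example slightly below 5, so a reading of 4.8 still activates the neuron. This makes the model more flexible and accurate.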
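Here is a minimal sketch of a bias moving a neuron’s cutoff. It assumes a simple step activation that fires when the total is positive. Real networks usually use smoother functions such as sigmoid or ReLU. The weight of 1.0 and the bias of -4.75 are hand-picked. In practice, training would find the bias. The function name `step_neuron` is hypothetical.

```python
def step_neuron(x, weight, bias):
    """Fire (return 1) when weight * x + bias is positive, otherwise return 0."""
    return 1 if weight * x + bias > 0 else 0

readings = [3.0, 4.7, 4.8, 5.0, 5.2]

# Without a bias the cutoff sits at x = 0, so every positive reading fires.
print([step_neuron(x, weight=1.0, bias=0.0) for x in readings])    # [1, 1, 1, 1, 1]

# A bias of -4.75 moves the cutoff to x = 4.75: readings near 5 fire, dimmer ones do not.
print([step_neuron(x, weight=1.0, bias=-4.75) for x in readings])  # [0, 0, 1, 1, 1]
```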

Just like weights, biases are also updated during training to help the network give better results. Together, weights and biases help the network respond to data smartly and flexibly.

Training the Network: From Predictions to Corrections

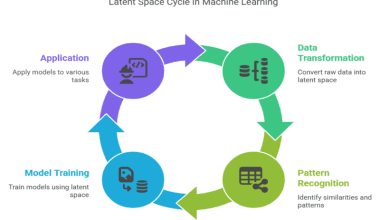

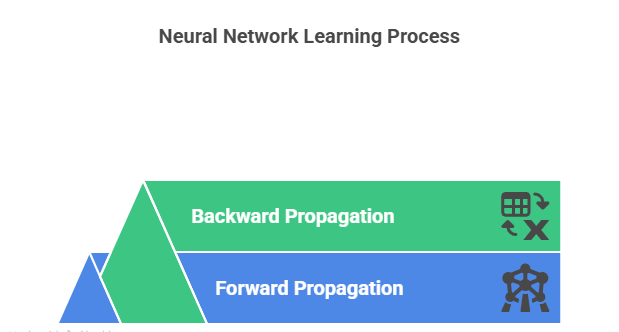

Once we understand the roles of weights and biases, we can look at how the learning process works. Neural networks learn in two steps: forward propagation and backward propagation.

Forward Propagation: Making a Prediction

This is the phase where the network takes input and makes a guess.

- Input Comes In: The input data (like an image or a sentence) enters the network.

- Weights in Action: Each neuron multiplies every input by its weight and adds the results into a single total (a weighted sum).

- Biases Added: The neuron adds its bias to this total, shifting the point at which it activates.

- Activation: The result goes through an activation function (such as sigmoid or ReLU), which determines how strongly the neuron “activates.”

- Pass It On: This output is sent to the next layer, and the process continues until the network gives its final answer.
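The forward-propagation steps above can be sketched in plain Python for a tiny network. It has 2 inputs, 2 hidden neurons using ReLU, and 1 output neuron using sigmoid. All weights, biases, and the helper name `dense_layer` are hypothetical values chosen for illustration. Libraries such as PyTorch or Keras perform the same arithmetic with fast matrix operations.

```python
import math

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: for each neuron, weighted sum + bias, then activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(x * w for x, w in zip(inputs, neuron_weights))  # weights in action
        total += bias                                               # bias added
        outputs.append(activation(total))                           # activation
    return outputs

# Hypothetical parameters for a 2-input -> 2-hidden -> 1-output network.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, 2.0]]
output_biases = [-1.0]

x = [2.0, 1.0]                                                        # input comes in
hidden = dense_layer(x, hidden_weights, hidden_biases, relu)          # pass it on
output = dense_layer(hidden, output_weights, output_biases, sigmoid)  # final answer

print(hidden)               # [0.5, 2.0]
print(round(output[0], 4))  # 0.9707
```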

Backward Propagation: Learning from Mistakes

After the network makes a prediction, it checks how close it was to the correct answer.

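- Error is Calculated: A loss function measures how far the network’s answer is from the correct one.

- Finding What Went Wrong: Using the chain rule from calculus, the network works backward from the output layer to figure out how much each weight and bias contributed to the error (its gradient).

- Making Adjustments: An optimizer such as gradient descent then nudges each weight and bias in the direction that reduces the error.

- Repeating the Process: This cycle repeats over many examples until the error stops improving and the network performs well at its task.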

Thanks to weights and biases, the network learns by improving itself with every example.
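Here is a minimal sketch of the full learn-from-mistakes loop. A single sigmoid neuron learns the logical AND function using binary cross-entropy loss and plain gradient descent. With only one layer, “working backward” is a single application of the chain rule. Deeper networks repeat that step layer by layer. The data, learning rate, and number of steps are illustrative choices, and this is not how any particular library implements training.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Truth table for logical AND: the output is 1 only when both inputs are 1.
data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

# Note: without a bias, input (0, 0) always gives a total of 0 and a prediction
# of 0.5, so the neuron could never learn AND. The bias b makes it possible.
w1, w2, b = 0.0, 0.0, 0.0
learning_rate = 1.0

for _ in range(5000):
    grad_w1 = grad_w2 = grad_b = 0.0
    for (x1, x2), target in data:
        # Forward propagation: weighted sum + bias, then sigmoid.
        prediction = sigmoid(w1 * x1 + w2 * x2 + b)
        # Backward propagation: for sigmoid + cross-entropy, the gradient of the
        # loss with respect to the neuron's total simplifies to (prediction - target).
        delta = prediction - target
        grad_w1 += delta * x1 / len(data)
        grad_w2 += delta * x2 / len(data)
        grad_b += delta / len(data)
    # Gradient descent: nudge every parameter against its average gradient.
    w1 -= learning_rate * grad_w1
    w2 -= learning_rate * grad_w2
    b -= learning_rate * grad_b

predictions = [round(sigmoid(w1 * x1 + w2 * x2 + b)) for (x1, x2), _ in data]
print(predictions)  # [0, 0, 0, 1]
```

After training, the learned bias is negative. That keeps the neuron “off” unless both weighted inputs push it over the threshold together.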

Real-World Applications: Where Weights and Biases Make Magic Happen

Now that we know how weights and biases work, let’s see them in action in real-life situations.

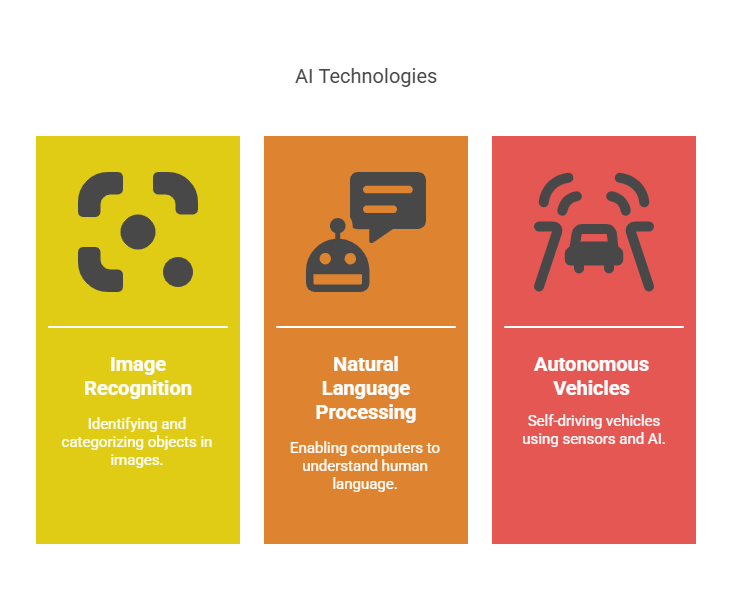

Image Recognition: Seeing Like a Human

Imagine an app that can recognize whether a photo has a cat in it. It looks at each pixel and tries to figure out if those pixels match what a cat usually looks like.

Weights help the network pay more attention to important features, such as whiskers, ears, or tail shapes. If a certain group of pixels usually means “cat,” their weights increase.

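Biases help the app be more flexible. Each neuron’s bias sets how much evidence it needs before it fires. Because biases are tuned together with the weights during training, the app can often still recognize a cat when some of the usual evidence is missing, for example when the cat is in a shadow or wearing a hat (yes, cat hats are a thing).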
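In convolutional networks used for image recognition, a small grid of weights (a filter) is slid across the image. A neuron responds strongly wherever the pixels match the filter’s pattern. Here is a minimal sketch of one such neuron applied at a single position. It assumes a hand-picked 3×3 filter that favors a bright vertical stripe, plus a hypothetical bias of -1.0. Real filters are learned from data, not written by hand, and the names here are illustrative.

```python
# A hypothetical 3x3 weight pattern that favors a bright vertical stripe in the middle column.
filter_weights = [
    [-1.0, 2.0, -1.0],
    [-1.0, 2.0, -1.0],
    [-1.0, 2.0, -1.0],
]
bias = -1.0

def neuron_response(patch, weights, bias):
    """Weighted sum of a 3x3 pixel patch plus bias, passed through ReLU."""
    total = sum(
        pixel * weight
        for patch_row, weight_row in zip(patch, weights)
        for pixel, weight in zip(patch_row, weight_row)
    )
    return max(0.0, total + bias)

stripe = [[0, 1, 0], [0, 1, 0], [0, 1, 0]]  # matches the pattern
flat = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]    # uniformly bright, no stripe

print(neuron_response(stripe, filter_weights, bias))  # 5.0
print(neuron_response(flat, filter_weights, bias))    # 0.0
```

The uniformly bright patch is not ignored because it is dark. It is ignored because the negative side weights cancel the positive center weights, and the bias keeps the neuron off.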

Natural Language Processing (NLP): Understanding Words

Neural networks are also great at understanding text. They help power chatbots, virtual assistants, and even spam filters.

Let’s say you’re building a model to detect whether a movie review is positive or negative. Words like “great” or “boring” are key signals.

Weights decide which words matter most. For example, “loved” might have a high weight for positive reviews, while “terrible” might be important for negative ones.

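Biases set the model’s baseline: how positive or negative it leans before any particular word is counted, and how much word evidence it needs to change its mind. Together with weights learned from many real reviews, they help the model cope with different writing styles, slang, or grammar mistakes.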
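Here is a minimal sketch of a word-weight sentiment scorer, a simple bag-of-words linear model. The word weights and the bias of 0.5 are hypothetical hand-set values; a trained model would learn them from labeled reviews. The sketch also ignores punctuation and word order. In this sketch, the bias determines the score a review gets when none of its words are in the vocabulary.

```python
import math

# Hypothetical hand-set word weights; a trained model would learn these.
word_weights = {
    "loved": 2.0,
    "great": 1.5,
    "boring": -1.5,
    "terrible": -2.5,
}
bias = 0.5  # hypothetical baseline: leans slightly positive before any word is counted

def positive_probability(review):
    """Sum the weights of known words, add the bias, and squash with a sigmoid."""
    words = review.lower().split()
    total = sum(word_weights.get(word, 0.0) for word in words) + bias
    return 1.0 / (1.0 + math.exp(-total))

print(round(positive_probability("I loved it great acting"), 3))   # 0.982
print(round(positive_probability("terrible and boring plot"), 3))  # 0.029
print(round(positive_probability("it was a movie"), 3))            # 0.622
```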

Autonomous Vehicles: Driving Smart and Safe

Self-driving cars rely on neural networks to stay safe on the road. These networks process images, radar, and other sensor data to detect pedestrians, signs, and other vehicles.

Weights help the car know what to focus on. For example, if a person’s outline appears in front of the car, the weights attached to those features tell the network, “Pay attention!”

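Biases, tuned together with the weights, help the network handle different driving conditions, such as fog, darkness, or unusual angles, by adjusting how much evidence each neuron needs before it fires. Even when the sensor data isn’t perfect, well-trained weights and biases help the network make good decisions.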

Summing It All Up

Weights and biases are how neural networks learn and make decisions. Weights decide the importance of each piece of input data, while biases provide the flexibility needed to deal with real-world unpredictability.

Whether it’s spotting a cat in a photo, understanding a customer review, or helping a car drive itself, weights and biases work silently in the background to make AI smarter and more useful.

Understanding these simple yet powerful concepts opens the door to grasping how modern AI truly functions. This is the perfect place to start for anyone curious about machine learning. Take the next step by joining Pickl.AI’s data science courses to dive deeper into these concepts and build real-world skills for the AI-driven future.

Frequently Asked Questions

What are weights and biases in neural networks?

Weights and biases are core elements of neural networks. Weights determine the importance of inputs, while biases allow flexibility by shifting the activation threshold of neurons. Together, they help the network learn patterns from data during training.

Why are weights and biases important in data science?

In data science, understanding how models learn from data is crucial. Weights and biases enable models to make accurate predictions by adjusting to patterns in real-world data, which is often noisy. They form the basis of how deep learning models perform intelligent tasks.

How are weights and biases adjusted during training?

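Weights and biases are updated using backpropagation together with an optimizer such as gradient descent. After making a prediction, the model calculates the error. Backpropagation then works out how much each weight and bias contributed to that error, and the optimizer adjusts them to reduce future mistakes. This learning loop continues until the error stops improving or the model reaches the desired accuracy.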