Summary: Continual learning is an AI approach where models incrementally learn from a continuous stream of changing data without forgetting previous knowledge. It addresses challenges like catastrophic forgetting, enabling AI systems to adapt efficiently to new tasks and dynamic environments, improving scalability and real-world applicability across diverse domains.

Introduction – What Is Continual Learning?

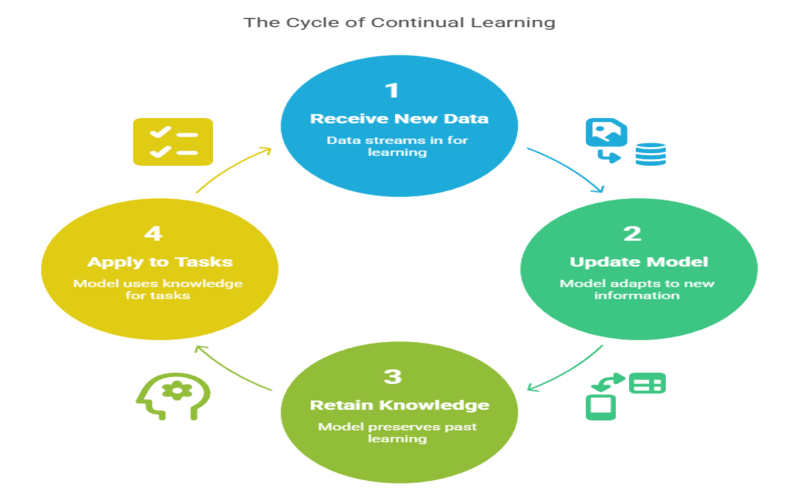

Continual learning, also known as lifelong or incremental learning, is an evolving paradigm in artificial intelligence (AI) and machine learning where models learn continuously from a stream of data or tasks over time.

Unlike traditional machine learning models that are trained once on a fixed dataset, continual learning systems adapt dynamically, incorporating new knowledge without forgetting previously learned information. This ability mimics human learning, where knowledge accumulates and evolves throughout life.

At its core, continual learning addresses the challenge of learning from nonstationary data—data whose distribution changes over time—and sequential tasks that arrive one after another. Instead of retraining models from scratch whenever new data appears, continual learning enables models to update incrementally, improving efficiency and adaptability in real-world applications.

Key Takeaways

- Continual learning enables AI to learn new tasks while retaining prior knowledge effectively.

- It addresses catastrophic forgetting, a major challenge in sequential task learning.

- Models adapt incrementally to nonstationary data without retraining from scratch.

- Continual learning improves AI scalability across multiple evolving tasks and domains.

- Inspired by human learning, it enhances AI flexibility and real-world relevance.

Why Continual Learning Is Important in AI

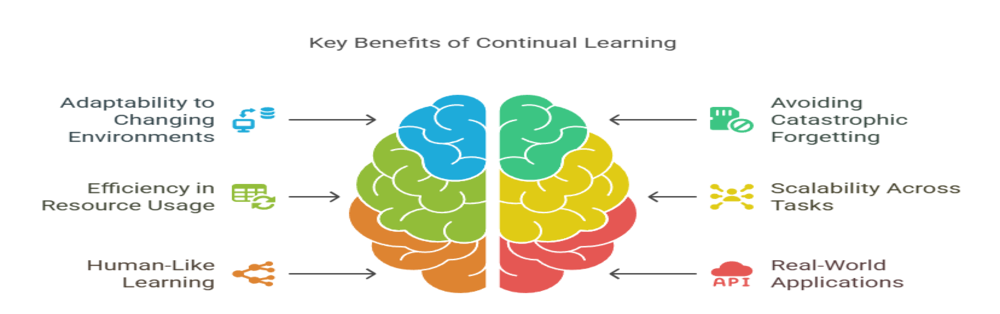

Continual learning is vital in AI because it enables models to adapt continuously to evolving data and environments, much like humans do. This adaptability ensures sustained performance despite changing conditions. It also prevents catastrophic forgetting, where models lose prior knowledge when learning new tasks.

- Adaptability to Changing Environments: AI systems must operate in dynamic contexts where data evolves continuously. Continual learning allows models to adapt to new conditions, ensuring sustained performance.

- Avoiding Catastrophic Forgetting: Traditional models often forget old knowledge when trained on new data. Continual learning techniques mitigate this issue by preserving prior knowledge while learning new information.

- Efficiency in Resource Usage: Retraining models from scratch is computationally expensive and time-consuming. Continual learning reduces this overhead by updating models incrementally.

- Scalability Across Tasks: Instead of building separate models for every new task, continual learning enables a single model to accumulate knowledge across multiple tasks or domains.

- Human-Like Learning: Continual learning mimics how humans learn, building on past experiences without losing them, which makes AI systems more natural and effective.

- Real-World Applications: Many practical AI applications, such as robotics, autonomous driving, and personalized assistants, require ongoing learning to handle new scenarios and user preferences.

Challenges in Continual Learning

Continual learning presents significant challenges that differentiate it from traditional machine learning. Unlike static models trained on fixed datasets, continual learning systems must adapt to nonstationary data streams where information changes over time.

- Catastrophic Forgetting: The most significant challenge. Learning new tasks causes a model to overwrite or forget previously acquired knowledge.

- Data Distribution Shifts: Handling changes in data distribution over time (nonstationarity) without access to all past data.

- Limited Memory and Storage: Storing all historical data for retraining is often infeasible due to privacy, storage, or computational constraints.

- Task Interference: When learning multiple tasks sequentially, updates for one task may degrade performance on others due to conflicting parameter changes.

- Balancing Stability and Plasticity: Maintaining a trade-off between retaining old knowledge (stability) and learning new information (plasticity) is complex.

- Evaluation Complexity: Assessing performance across multiple tasks and over time requires sophisticated benchmarks and metrics.

- Scalability of Models: As tasks increase, models must efficiently scale without becoming too large or slow.
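Catastrophic forgetting and the stability-plasticity trade-off can be seen even in a toy setting. The sketch below is a minimal illustration, not a real continual learning method. It assumes a one-weight linear model, two hypothetical tasks with deliberately conflicting targets, and an L2 penalty that pulls the weight back toward its task-A value (a simplified stand-in for regularization-based approaches). All names are illustrative.

```python
def mse(w, data):
    """Mean squared error of the one-weight model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def train(w, data, lr=0.1, epochs=50, anchor=None, strength=0.0):
    """Full-batch gradient descent on y = w * x.

    If `anchor` is given, the loss gains the term
    strength * (w - anchor) ** 2, which resists moving away from `anchor`.
    """
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        if anchor is not None:
            grad += 2 * strength * (w - anchor)
        w -= lr * grad
    return w


xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]   # hypothetical task A: y = 2x
task_b = [(x, -1.0 * x) for x in xs]  # hypothetical task B: y = -x

# Learn task A first.
w_a = train(0.0, task_a)
loss_a_before = mse(w_a, task_a)

# Naive sequential training: continue on task B with no protection.
w_naive = train(w_a, task_b)
loss_a_naive = mse(w_naive, task_a)
loss_b_naive = mse(w_naive, task_b)

# Anchored training: same data, plus a pull back toward the task-A weight.
w_anchored = train(w_a, task_b, anchor=w_a, strength=1.0)
loss_a_anchored = mse(w_anchored, task_a)
loss_b_anchored = mse(w_anchored, task_b)

print(f"task A loss after A only:      {loss_a_before:.4f}")
print(f"task A loss, naive B training: {loss_a_naive:.4f}")
print(f"task A loss, anchored:         {loss_a_anchored:.4f}")
print(f"task B loss, naive / anchored: {loss_b_naive:.4f} / {loss_b_anchored:.4f}")
```

Naive training on task B drives the task-A error up sharply. The anchored run forgets less but also fits task B less well. Raising `strength` favors stability, and lowering it favors plasticity. Because a single weight cannot satisfy both tasks, this is the extreme case of task interference; larger models have more room to accommodate both.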

Why These Challenges Arise

Continual learning enables AI models to learn from a continuous stream of data and adapt to new tasks over time, and that same dynamic process is the source of the challenges listed above. Each one stems from the need to retain previously learned knowledge while incorporating new information in environments where data distributions change unpredictably. Catastrophic forgetting, for example, shows up as a significant drop in performance on earlier tasks after training on new ones. Understanding these obstacles is crucial for developing robust, efficient, and scalable continual learning systems.

Two further challenges arise in practice:

- Class-Incremental Learning Complexity: When new classes are introduced over time, the model must keep discriminating among all classes learned so far, not only the newest ones.

- Noise and Outlier Handling: Models must identify and ignore irrelevant or erroneous data that can mislead learning.
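Measuring performance across tasks is often done from an accuracy matrix, where `acc[i][j]` is the accuracy on task `j` measured after training on task `i`. The sketch below computes two commonly reported summaries, average accuracy and backward transfer, under that assumption. The matrix values are made up for illustration and do not come from any real experiment.

```python
def average_accuracy(acc):
    """Mean accuracy over all tasks after training on the final task."""
    final_row = acc[-1]
    return sum(final_row) / len(final_row)


def backward_transfer(acc):
    """Average change in accuracy on each earlier task between the moment
    it was first learned and the end of training. Negative values
    indicate forgetting."""
    num_tasks = len(acc)
    if num_tasks < 2:
        raise ValueError("backward transfer needs at least two tasks")
    changes = [acc[-1][j] - acc[j][j] for j in range(num_tasks - 1)]
    return sum(changes) / len(changes)


# Hypothetical results for three tasks learned in order.
# Row i = after training on task i; column j = accuracy on task j.
acc = [
    [0.95, 0.10, 0.12],
    [0.80, 0.93, 0.11],
    [0.70, 0.85, 0.94],
]

print(f"average accuracy:  {average_accuracy(acc):.3f}")
print(f"backward transfer: {backward_transfer(acc):.3f}")
```

In this made-up example the final average looks reasonable, while the negative backward transfer shows that accuracy on the first two tasks fell after later training. Reporting both keeps a strong result on the newest task from hiding losses on older ones.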

Applications of Continual Learning

Continual learning is increasingly vital for AI systems that must operate in dynamic, real-world environments where data and tasks evolve over time. Unlike traditional static models, it enables AI to adapt continuously, improving performance and relevance across diverse applications.

- Robotics: Robots continuously acquire new skills and adapt to changing surroundings without forgetting previously learned tasks, enabling versatile and long-term operation in unstructured environments.

- Autonomous Vehicles: Self-driving cars update their perception and decision-making models to handle new traffic scenarios, weather conditions, and road layouts, ensuring safety and reliability.

- Personalized Assistants: Virtual assistants learn from ongoing user interactions, adapting to evolving preferences, languages, and contexts to provide more accurate and personalized responses.

- Healthcare: Diagnostic and treatment models incorporate new medical research and patient data over time, improving accuracy and enabling personalized medicine.

- Natural Language Processing (NLP): Language models improve continuously by learning from new linguistic patterns, dialects, and user-generated content, enhancing understanding and generation capabilities.

- 3D Vision and Semantic Segmentation: In robotics and computer vision, continual learning supports real-time adaptation to new objects and environments, essential for tasks like scene understanding and navigation.

Continual Learning vs Transfer Learning vs Online Learning

Understanding the distinctions between continual learning, transfer learning, and online learning is essential for selecting the right approach in AI applications. Each method addresses different challenges related to how models learn and adapt over time.

Continual Learning

- Involves sequentially training a model on a stream of tasks or data, where the model must retain previously learned knowledge while acquiring new information.

- Designed to handle nonstationary data distributions and an unknown number of future tasks.

- Aims to prevent catastrophic forgetting, where learning new tasks causes the model to lose performance on earlier tasks.

- Inspired by human neuroplasticity, continual learning enables models to adapt continuously in dynamic environments without retraining from scratch.

- Example: Learning to recognize multiple languages sequentially, maintaining proficiency in all.
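One common way to retain earlier knowledge without storing the full history is rehearsal: keep a small memory of past examples and mix them into later training batches. The sketch below assumes reservoir sampling as the memory policy, which keeps a uniform random sample of everything seen so far in fixed space. The class name and the `(task_id, index)` examples are hypothetical, and the training step itself is left as a comment.

```python
import random


class ReservoirBuffer:
    """Fixed-size memory holding a uniform random sample of a stream."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0  # total number of examples offered so far
        self.rng = random.Random(seed)

    def add(self, example):
        self.seen += 1
        if len(self.items) < self.capacity:
            # Buffer not full yet: always keep the example.
            self.items.append(example)
        else:
            # Keep the new example with probability capacity / seen,
            # overwriting a uniformly chosen slot.
            slot = self.rng.randrange(self.seen)
            if slot < self.capacity:
                self.items[slot] = example

    def sample(self, k):
        """Return up to k stored examples to replay alongside new data."""
        return self.rng.sample(self.items, min(k, len(self.items)))


buffer = ReservoirBuffer(capacity=20)
for task_id in range(3):          # three tasks arriving one after another
    for index in range(100):      # 100 examples per task
        example = (task_id, index)
        replay = buffer.sample(4)  # older examples to mix into this step
        # A real system would update the model on [example] + replay here.
        buffer.add(example)

print(len(buffer.items), "stored out of", buffer.seen, "seen")
```

The buffer size stays constant however long the stream runs, which addresses the memory constraint, though storing raw examples may not be acceptable where privacy rules out keeping past data.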

Transfer Learning

- Focuses on leveraging knowledge from a pre-trained model on one task to improve learning on a related but different task.

- Typically involves fine-tuning a model trained on a large dataset (e.g., ImageNet) for a new, often smaller, dataset.

- Does not inherently handle sequential task learning or forgetting; it’s a one-time knowledge transfer to accelerate training.

- Useful when data for the new task is limited and training from scratch is costly.

- Example: Using a model trained on general object recognition to identify specific dog breeds.
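The fine-tuning idea can be sketched without a deep learning library. In the toy example below, `pretrained_features` is a hypothetical stand-in for a frozen pre-trained network, and only a small linear head is trained on the new task's few examples. The feature function, the target data, and all names are assumptions for illustration.

```python
def pretrained_features(x):
    """Stand-in for a frozen pre-trained feature extractor (hypothetical).
    Its behavior is never updated during fine-tuning."""
    return [x, x * x]


def predict(head, bias, x):
    feats = pretrained_features(x)
    return sum(w * f for w, f in zip(head, feats)) + bias


def mse(head, bias, data):
    return sum((predict(head, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune_head(data, lr=0.1, epochs=200):
    """Train only the linear head with full-batch gradient descent."""
    head, bias = [0.0, 0.0], 0.0
    n = len(data)
    for _ in range(epochs):
        grad_head, grad_bias = [0.0, 0.0], 0.0
        for x, y in data:
            err = predict(head, bias, x) - y
            for i, f in enumerate(pretrained_features(x)):
                grad_head[i] += 2 * err * f / n
            grad_bias += 2 * err / n
        head = [w - lr * g for w, g in zip(head, grad_head)]
        bias -= lr * grad_bias
    return head, bias


# Small hypothetical target dataset: y = 3x^2 + 1 at five points.
target_data = [(x, 3 * x * x + 1) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

loss_before = mse([0.0, 0.0], 0.0, target_data)
head, bias = fine_tune_head(target_data)
loss_after = mse(head, bias, target_data)

print(f"loss before fine-tuning: {loss_before:.4f}")
print(f"loss after fine-tuning:  {loss_after:.4f}")
```

Because the extractor is frozen, only three numbers are learned, which is why a handful of examples is enough here. Nothing in this procedure protects performance on the original source task, which is the gap continual learning targets.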

Online Learning

- Involves incrementally updating a model with each new data point or small batch as it arrives, often assuming data distributions change slowly or remain stationary.

- Enables models to adapt in real-time or near-real-time without retraining on the entire dataset.

- Typically focuses on continuous improvement rather than learning distinct tasks sequentially.

- Widely used in dynamic environments like recommendation systems, fraud detection, and adaptive control.

- Example: Updating a spam filter continuously as new emails arrive.
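The spam filter example can be sketched as an online logistic regression over word features, updated once per incoming message. This is a minimal sketch under stated assumptions: the class name, the learning rate, and the four-message stream are all hypothetical, and a production filter would use far richer features.

```python
import math


class OnlineSpamFilter:
    """Logistic regression over bag-of-words features, updated per message."""

    def __init__(self, lr=0.5):
        self.lr = lr
        self.weights = {}  # word -> weight, created lazily
        self.bias = 0.0

    @staticmethod
    def _words(text):
        return set(text.lower().split())

    def predict_proba(self, text):
        """Estimated probability that the message is spam."""
        score = self.bias + sum(self.weights.get(w, 0.0) for w in self._words(text))
        return 1.0 / (1.0 + math.exp(-score))

    def update(self, text, is_spam):
        """One stochastic gradient step on a single labeled message."""
        error = self.predict_proba(text) - (1.0 if is_spam else 0.0)
        for w in self._words(text):
            self.weights[w] = self.weights.get(w, 0.0) - self.lr * error
        self.bias -= self.lr * error


spam_filter = OnlineSpamFilter()

# Hypothetical stream of (message, is_spam) pairs arriving one at a time.
stream = [
    ("win free prize now", True),
    ("meeting agenda for monday", False),
    ("free prize claim now", True),
    ("lunch on monday", False),
]
for text, label in stream:
    spam_filter.update(text, label)  # no stored dataset, no retraining

print(spam_filter.predict_proba("free prize") > 0.5)
print(spam_filter.predict_proba("monday meeting") < 0.5)
```

Each message is used once and then discarded, so memory use depends on vocabulary size, not on how many emails have arrived. Unlike a continual learning setup, there are no distinct tasks here and nothing explicitly guards against older patterns being overwritten.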

Key Differences Summarized

- Continual Learning: Sequential multi-task learning with retention of prior knowledge and adaptation to changing data.

- Transfer Learning: One-time transfer of knowledge from a source task to a related target task to speed up training.

- Online Learning: Continuous incremental updates to the model as new data arrives, focusing on data stream adaptation.

Tools and Frameworks Supporting Continual Learning

Continual learning is a rapidly evolving field, and several tools and frameworks have emerged to support researchers and practitioners in developing and deploying models that learn continuously without forgetting. These frameworks provide essential building blocks, algorithms, and infrastructure to implement various continual learning strategies efficiently.

TensorFlow

Developed by Google Brain, TensorFlow is a widely used open-source machine learning framework that supports scalable and flexible development of deep learning models. Its extensive ecosystem, including TensorFlow Extended (TFX), supports production pipelines that can incorporate continual learning workflows.

Avalanche

Avalanche is a PyTorch-based open-source library specifically designed for continual learning research and experimentation. It provides a rich set of benchmarks, datasets, and state-of-the-art continual learning algorithms, including replay methods, regularization, and parameter isolation techniques.

CLFace Framework

CLFace is a specialized continual learning framework developed for lifelong face recognition, addressing key challenges like catastrophic forgetting and resource constraints. It uses feature-level distillation and geometry-preserving techniques to maintain and incrementally extend learned knowledge without requiring full retraining or storing past data.

The Future of Continual Learning in AI

Continual learning promises to make AI systems more intelligent, resilient, and aligned with human learning processes.

- Advanced Algorithms: Research continues to improve methods that better balance stability and plasticity, reduce forgetting, and handle complex, real-world task sequences.

- Integration with Reinforcement Learning: Combining continual learning with reinforcement learning will enable agents to adapt policies in real-time to dynamic and uncertain environments.

- Scalable and Flexible Architectures: Models will grow dynamically with task complexity and data volume, becoming more efficient and capable.

- Cross-Domain Knowledge Transfer: Future models will transfer knowledge across vastly different domains while preserving task-specific expertise.

- Human-AI Collaboration: Continual learning will enable AI systems to learn interactively with humans, adapting to feedback and evolving needs in personalized ways.

- Ethical and Privacy Considerations: Ensuring continual learning respects data privacy, fairness, and robustness as models evolve over time will be critical.

Frequently Asked Questions

What Is the Meaning of Continual Learning?

Continual learning is an AI approach where models learn continuously from new data or tasks over time while retaining previously learned knowledge.

What Is an Example of Continual Learning?

A personal assistant updates its understanding of a user’s preferences as interactions accumulate over months, without losing earlier knowledge.

What Is the Difference Between Continual Learning and Incremental Learning?

The two terms are often used interchangeably. When a distinction is drawn, incremental learning usually refers to learning from new data batches sequentially, while continual learning emphasizes learning multiple tasks over time without forgetting.