Summary: Batch size in deep learning controls how many data samples a model processes before updating its weights. It impacts training speed, memory, and accuracy. Understanding it helps improve model performance. Learn how steps, epochs, and batch size work together and how to choose the right batch size for your deep learning project.

Introduction

If you’ve ever trained a deep learning model, or just read about one, you’ve likely come across the term batch size. But what does it actually mean? Why does it matter? And how do you choose the right batch size for your model?

Don’t worry if you don’t have a technical background. In this blog, we’ll explain everything about batch size in deep learning in simple, everyday language. Let’s begin.

Key Takeaways

- Batch size in deep learning is the number of data samples processed before updating the model.

- It affects training speed, memory use, and overall model accuracy.

- Steps, epochs, and batch size in deep learning work together to control training flow.

- The right batch size depends on hardware, data size, and learning goals.

- You can start learning data science basics and advanced AI concepts with Pickl.AI courses.

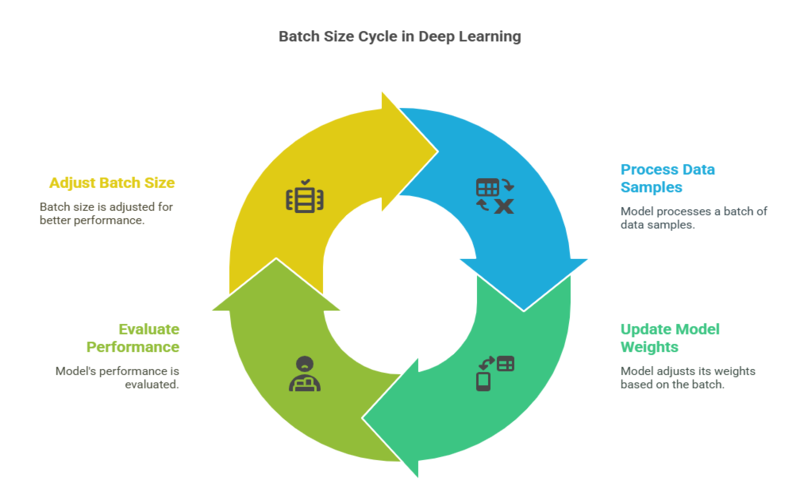

What is Batch Size in Deep Learning?

Imagine you have a huge pile of exam papers to check. Instead of going through all 1,000 papers at once, you decide to grade 100 at a time. You grade 100, take a break, and continue with the next 100. That’s how batch size works in deep learning.

In simple terms, batch size in deep learning is the number of data samples the model processes before updating its internal parameters (called weights). It’s like breaking a large task into smaller, more manageable parts.

If your model has to learn from 10,000 images and your batch size is 100, it will process 100 images, update its weights, and then move on to the next 100. One full pass over the data therefore takes 100 updates.
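The same bookkeeping can be sketched in a few lines of plain Python. This is a minimal illustration, not framework code: make_batches is a hypothetical helper, and the integers simply stand in for images.

```python
def make_batches(samples, batch_size):
    """Split a list of samples into consecutive batches of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]

# Hypothetical stand-in for 10,000 images: just their index numbers.
images = list(range(10_000))
batches = make_batches(images, batch_size=100)

print(len(batches))     # 100 batches, i.e. 100 weight updates per pass
print(len(batches[0]))  # 100 images in each batch
```

Real frameworks do this splitting for you (and can shuffle the data first), but the counting works the same way.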

What is Batch Size in Neural Network?

Deep learning models are built from neural networks, so batch size means the same thing here. Looking at how a network learns shows why it matters.

A neural network is a type of computer model loosely inspired by the human brain. It learns from examples by adjusting the strength of connections between its artificial “neurons.” With large datasets, though, it is usually impractical to look at every example before each adjustment.

So, what is batch size in neural network terms?

Think of it like feeding a child one spoonful at a time. If you feed too much at once (large batch size), the child may not digest well. If you feed too little (very small batch size), the child takes forever to finish the meal. Batch size helps the model “digest” data efficiently and learn better.

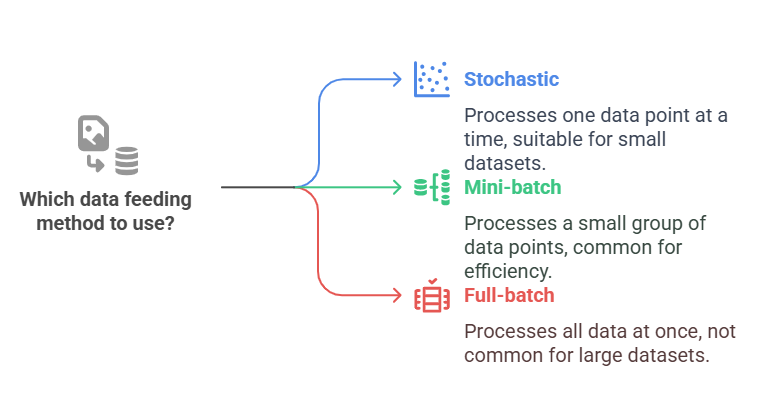

There are three main ways of feeding data:

- Stochastic: One data point at a time (batch size = 1)

- Mini-batch: A small group of data points at a time (common)

- Full-batch: All data at once (not common for large datasets)

Mini-batches are the most popular because they offer a good balance between learning speed and memory use.
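To make the three options concrete, here is a minimal sketch in plain Python (no deep learning library) that fits a one-parameter model, y ≈ w·x, with gradient descent. The toy dataset and the train helper are hypothetical; the only thing that changes between runs is batch_size, which in turn changes how many weight updates happen per epoch.

```python
import random

def train(data, batch_size, lr=0.5, epochs=50, seed=0):
    """Fit y ≈ w * x with (mini-)batch gradient descent on mean squared error.

    Returns the learned weight and the total number of weight updates.
    """
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not modify the caller's list
    w = 0.0
    updates = 0
    for _ in range(epochs):
        rng.shuffle(data)  # reshuffle so the batches differ each epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradient of mean((w*x - y)^2) with respect to w, averaged over the batch
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad
            updates += 1
    return w, updates

# Hypothetical toy dataset: 1,000 points on the line y = 3x (no noise)
data = [(i / 999, 3 * i / 999) for i in range(1000)]

for name, bs in [("stochastic", 1), ("mini-batch", 32), ("full-batch", len(data))]:
    w, updates = train(data, batch_size=bs)
    print(f"{name:>10}: batch_size={bs:4d}  updates={updates:5d}  w={w:.4f}")
```

All three settings end up with roughly the same weight on this easy problem, but stochastic training makes 1,000 updates per epoch, mini-batches of 32 make 32, and full-batch training makes just one.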

What Are Steps, Epochs, and Batch Size in Deep Learning?

Now let’s break down another common confusion: what are steps, epochs, and batch size in deep learning?

These three terms work closely together.

- Batch Size: Number of samples processed at once.

- Epoch: One complete pass through the entire dataset.

- Step: One update based on one batch.

Let’s take an example:

Suppose you have 1,000 training samples and your batch size is 100.

- It will take 10 batches to go through all 1,000 samples.

- So, 10 steps = 1 epoch.

- If you train for 5 epochs, the model will go through the data 5 times (i.e., 50 steps).

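A simple formula:

$$\text{Steps per epoch} = \left\lceil \frac{\text{Number of training samples}}{\text{Batch size}} \right\rceil, \qquad \text{Total steps} = \text{Steps per epoch} \times \text{Number of epochs}$$

The ceiling accounts for a final, smaller batch when the dataset size is not a multiple of the batch size and that partial batch is kept. With 1,000 samples and a batch size of 100, this gives 10 steps per epoch and 50 steps over 5 epochs.
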
Understanding these basics helps in designing better training schedules for deep learning models.
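The relationship between samples, batch size, steps, and epochs translates directly into code. The sketch below uses hypothetical helpers, not library functions; the drop_last flag borrows the name of a similar option in PyTorch’s DataLoader and shows what happens when the final, smaller batch is discarded instead of kept.

```python
import math

def steps_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of weight updates (steps) in one pass over the dataset."""
    if drop_last:
        return num_samples // batch_size           # discard the final partial batch
    return math.ceil(num_samples / batch_size)     # keep the final partial batch

def total_steps(num_samples, batch_size, epochs, drop_last=False):
    """Steps per epoch multiplied by the number of epochs."""
    return steps_per_epoch(num_samples, batch_size, drop_last) * epochs

print(steps_per_epoch(1000, 100))                 # 10
print(total_steps(1000, 100, epochs=5))           # 50
print(steps_per_epoch(1000, 64))                  # 16: 15 full batches plus one batch of 40
print(steps_per_epoch(1000, 64, drop_last=True))  # 15
```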

How Batch Size Impacts Model Training

Choosing the right batch size is important because it affects:

Speed of Training

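Larger batches usually make each epoch faster, because the hardware (especially a GPU) can process many samples in parallel and the model performs fewer weight updates per pass over the data. Faster epochs do not always mean reaching good accuracy sooner, though, and too large a batch may need more memory than your computer or GPU can handle.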

Memory Usage

Small batches require less memory, making them a good fit for limited hardware. Large batches can cause out-of-memory errors that stop training.
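To get a feel for why memory grows with batch size, here is a back-of-the-envelope sketch. All numbers are hypothetical placeholders: a fixed cost for the model’s weights, gradients, and optimizer state, plus activation memory that is stored for every sample in the batch and therefore grows roughly linearly with batch size.

```python
# Hypothetical figures for illustration only; real values depend on the model,
# input size, numeric precision, and framework.
FIXED_MB = 1_500      # weights, gradients, optimizer state: same for any batch size
PER_SAMPLE_MB = 40    # activations kept for the backward pass, per sample

def estimated_memory_mb(batch_size):
    """Rough estimate: fixed cost plus activation memory that grows linearly with batch size."""
    return FIXED_MB + batch_size * PER_SAMPLE_MB

for bs in (16, 32, 64, 128, 256):
    print(f"batch_size={bs:3d}: about {estimated_memory_mb(bs) / 1024:.1f} GB")
```

Doubling the batch size roughly doubles the activation part of the bill, which is why a batch that fits comfortably at 64 may fail at 256.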

Model Accuracy

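Small batches produce noisier, less precise weight updates, so training can look bumpy, but that noise can help the model generalize. Very large batches give smoother updates, yet without other adjustments (such as tuning the learning rate) they can lead to a model that performs worse on new data.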
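The “noisier updates” point can be checked numerically. In this sketch, which uses made-up per-sample gradient values rather than a real model, each mini-batch gradient is an average over a random sample, and its typical error shrinks roughly in proportion to 1/√(batch size).

```python
import math
import random
import statistics

rng = random.Random(42)

# Hypothetical per-sample gradients for a single weight; their mean is the
# "true" full-dataset gradient.
per_sample_grads = [rng.gauss(1.0, 2.0) for _ in range(10_000)]
true_grad = statistics.fmean(per_sample_grads)

def gradient_noise(batch_size, trials=500):
    """Root-mean-square error of mini-batch gradient estimates versus the true gradient."""
    errors = []
    for _ in range(trials):
        batch = rng.sample(per_sample_grads, batch_size)
        errors.append((statistics.fmean(batch) - true_grad) ** 2)
    return math.sqrt(statistics.fmean(errors))

for bs in (4, 32, 256):
    print(f"batch_size={bs:3d}: typical gradient error = {gradient_noise(bs):.3f}")
```

With these settings the error at batch size 256 comes out several times smaller than at batch size 4.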

So, batch size in deep learning is not just a technical setting—it directly affects how well and how fast your model learns.

How to Choose Batch Size

Now comes the big question: how to choose batch size?

There’s no one-size-fits-all answer, but here are some helpful tips:

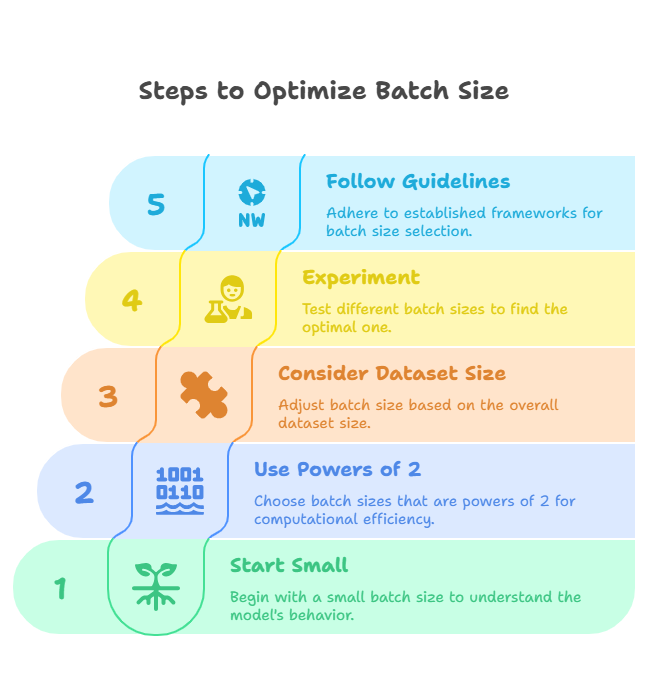

Start Small

If you’re working with limited hardware (like a laptop), start with a small batch size such as 16 or 32. This lowers the risk of running out of memory, and you can increase it later if there is room.

Use Powers of 2

Batch sizes like 32, 64, 128, or 256 are common. They fit neatly with how GPUs organize memory and parallel work, though other sizes usually work fine too.
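“Start small” and “use powers of 2” combine naturally into a simple search: begin at a modest size and keep doubling until the next size no longer fits. In this sketch, fits is a hypothetical callable; in a real project it would run a single training step and catch your framework’s out-of-memory error. The memory figures in fake_fits are invented for illustration.

```python
def find_max_batch_size(fits, start=16, limit=1024):
    """Start small and keep doubling the batch size while it still fits in memory.

    `fits` is a callable that takes a batch size and returns True if a training
    step at that size succeeds. Returns the largest size that fit, or None if
    even `start` does not fit.
    """
    if not fits(start):
        return None
    batch_size = start
    while batch_size * 2 <= limit and fits(batch_size * 2):
        batch_size *= 2
    return batch_size

# Hypothetical stand-in for "run one training step and catch an out-of-memory error":
# pretend the GPU has 8 GB and each sample needs 40 MB on top of a 1.5 GB fixed cost.
def fake_fits(batch_size):
    return 1_500 + 40 * batch_size <= 8 * 1024

print(find_max_batch_size(fake_fits))  # 128
```

In practice, it is wise to leave some headroom below the largest size that fits, since memory use can vary from batch to batch.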

Consider Dataset Size

For smaller datasets, small batches often work better, because they give the model more updates per epoch. For large datasets, try increasing the batch size gradually to speed things up.

Experiment

What works for one problem may not work for another. Try different sizes and monitor your model’s accuracy and loss during training.
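One way to run that experiment is a small sweep: train the same model with several batch sizes and compare the loss on held-out validation data. The sketch below does this for a toy two-parameter model in plain Python; the dataset, learning rate, and epoch count are hypothetical choices for illustration.

```python
import random

def make_data(n, seed):
    """Noisy points around the line y = 2x + 1 (a hypothetical toy dataset)."""
    rng = random.Random(seed)
    return [(x, 2 * x + 1 + rng.gauss(0, 0.1)) for x in (rng.random() for _ in range(n))]

def mse(w, b, data):
    """Mean squared error of the line y = w*x + b on the given data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(data, batch_size, lr=0.1, epochs=20, seed=0):
    """Mini-batch gradient descent on mean squared error for y ≈ w*x + b."""
    rng = random.Random(seed)
    data = list(data)
    w = b = 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for i in range(0, len(data), batch_size):
            batch = data[i:i + batch_size]
            errs = [w * x + b - y for x, y in batch]
            w -= lr * 2 * sum(e * x for e, (x, _) in zip(errs, batch)) / len(batch)
            b -= lr * 2 * sum(errs) / len(batch)
    return w, b

train_set, val_set = make_data(800, seed=1), make_data(200, seed=2)
results = {}
for bs in (8, 32, 128, 800):
    w, b = train(train_set, bs)
    results[bs] = mse(w, b, val_set)
    print(f"batch_size={bs:3d}: validation MSE = {results[bs]:.4f}")
```

Because the number of epochs is held fixed here, larger batches also mean fewer weight updates, so with this setup the full-batch run (800) ends with a noticeably higher validation loss. In a real project you might instead compare runs after the same amount of training time or number of updates.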

Follow Framework Guidelines

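Framework defaults and official examples are a reasonable starting point. For example, Keras’s model.fit uses a batch size of 32 if you don’t set one, while PyTorch’s DataLoader defaults to a batch size of 1, so there you will almost always set batch_size yourself.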

So, when you’re wondering how to choose batch size, think about your hardware, data size, and what matters more to you: speed or accuracy.

Real-world Examples and Best Practices

Let’s look at how batch size is used in the real world.

Example 1: Image Recognition

In an image classification project with around 50,000 pictures, batch sizes between 32 and 128 are a common choice, balancing training time against accuracy.

Example 2: Medical Data

For smaller, sensitive datasets like MRI scans, batch sizes are often kept low (8–32). Each scan can take a lot of memory, and smaller batches give the model more updates from a limited amount of data, which can help it generalize.

Example 3: Online Learning

When data keeps arriving (like live stock market prices), very small batch sizes, or even a batch size of 1 (stochastic), are used so the model can learn in real time.
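For a streaming setting, a batch size of 1 means the model updates as soon as each observation arrives. The sketch below simulates this with a hypothetical data feed in plain Python; it is not a real market-data API, and the model is a single weight for clarity.

```python
import itertools
import random

def stream(seed=0):
    """Hypothetical live data feed: each new observation follows y = 0.5 * x plus a little noise."""
    rng = random.Random(seed)
    while True:
        x = rng.uniform(-1, 1)
        yield x, 0.5 * x + rng.gauss(0, 0.05)

w = 0.0    # model weight, updated as data arrives
lr = 0.05  # learning rate

# Batch size = 1: update immediately after each observation, then move on.
for x, y in itertools.islice(stream(), 5_000):
    w -= lr * 2 * (w * x - y) * x  # gradient of (w*x - y)**2 with respect to w

print(f"weight after 5,000 single-sample updates: {w:.3f}")
```

Each update uses only the newest observation, so the model never needs to store the full history of the feed.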

Best Practices:

- Always monitor your model’s training loss and accuracy.

- Try multiple batch sizes to compare results.

- If training is too slow and you have spare memory, increase the batch size.

- If the model overfits, try reducing the batch size; if the loss fluctuates wildly, try a larger batch size or a lower learning rate.

Rounding Up

Understanding batch size in deep learning is key to building efficient and accurate AI models. It affects training speed, memory use, and how well your model performs. Whether you’re working with images, text, or real-time data, choosing the right batch size makes a huge difference. If you’re just starting out, don’t worry—experiment and learn.

Want to dive deeper into such exciting concepts? Begin your journey in AI by joining expert-led data science courses at Pickl.AI. Their hands-on approach and industry projects make complex topics simple and practical for all. Start learning today and become future-ready!

Frequently asked questions

What is batch size in neural network training?

Batch size in neural network training refers to the number of samples the model processes before updating its weights. It helps balance training speed and memory use, directly impacting how efficiently and accurately the model learns from data.

What are steps, epochs, and batch size in deep learning?

In deep learning, batch size is the number of samples processed at once. An epoch is one complete pass through the dataset, and a step is one weight update on a single batch, so the number of steps per epoch equals the number of batches. Together, they control how often and when the model updates during training.

How to choose batch size for a deep learning model?

To choose batch size, consider hardware limits, dataset size, and model accuracy. Start with small powers of 2 like 32 or 64. Experiment and observe the model’s performance to find the best fit for your specific project or dataset.